Race Against the Machine - The Countdown to Adversarial AI

Unless you’ve been living under a rock for the last few months, you’ve probably heard of OpenAI’s ChatGPT chatbot and its artistic cousins, Dall-E and Midjourney. These systems have transformed the way many of us view the immediate impact of artificial intelligence and machine learning on our everyday lives. Savvy computer users are employing these AI tools to do everything from updating their résumés, to writing and debugging code, to composing poetry, art, and music. These tools are opening our eyes to new possibilities in every imaginable field of work. The potential is both exhilarating and terrifying. But what exactly are ChatGPT, Dall-E, and Midjourney? How could these AI tools be used to commit, or aid in committing, cybercrime, and what does this mean for the cybersecurity industry as a whole? In this blog, we explore those questions and attempt to provide some answers.

Bots on parade

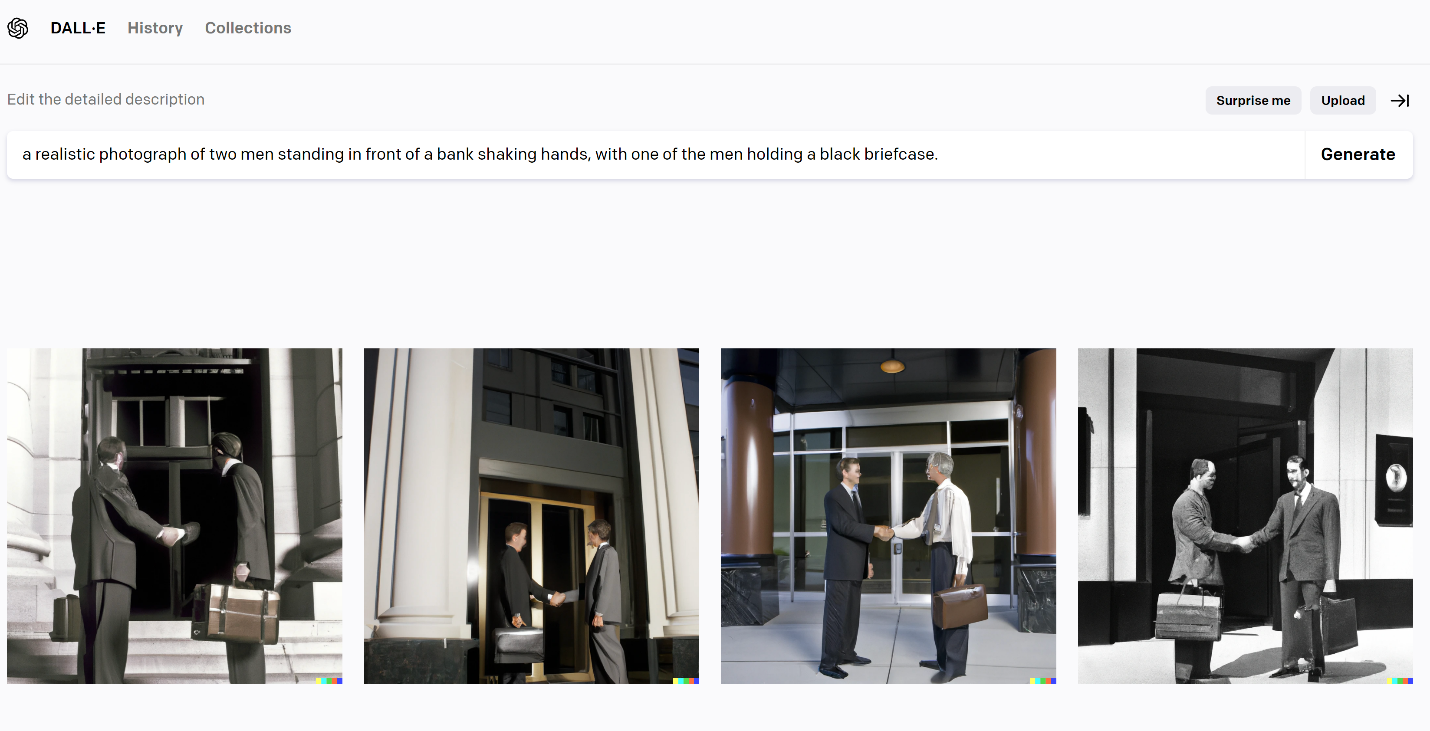

ChatGPT and Dall-E are both artificial intelligence-based bots developed by OpenAI. OpenAI Inc. was founded in December 2015 by a group that included Sam Altman, Elon Musk, Greg Brockman, and Ilya Sutskever. The organization has received significant funding from various sources, including the U.S. National Science Foundation and Microsoft, among other notable entities. OpenAI states that it was founded to promote “friendly AI” that benefits all of humanity. ChatGPT takes the form of a chatbot that answers questions much like a modern search engine, but instead of linking to existing resources, it generates the answers itself using a language model trained on a large body of text. Dall-E, its artistic counterpart, creates images from its interpretation of textual descriptions.

Midjourney is a project led by David Holz, co-founder of Leap Motion. Like Dall-E, it uses artificial intelligence to create images from textual descriptions. Unlike Dall-E and ChatGPT, Midjourney operates, as of this writing, entirely through Discord servers. In December 2022, Midjourney was famously used to illustrate an AI-generated children’s book titled Alice and Sparkle.

Using Dall-E, anyone can create images based on textual descriptions.

Source: https://openai.com/dall-e-2/

Midjourney takes what’s possible with Dall-E and improves on it, generating clearer, more detailed images from textual descriptions.

Source: https://midjourney.com/

OpenAI uses a technique commonly called “unsupervised” (more precisely, self-supervised) learning, which involves training an AI model on a large dataset of unlabeled data: the model learns by repeatedly predicting the next word in a passage of text. In the case of ChatGPT, the training dataset is a massive collection of text from sources including books, articles, and websites, collected up to 2021, and the model was then fine-tuned with human feedback. It’s important to point out that, at the time of this writing, although ChatGPT learned from information on the internet, it has no live connection to the internet from which to draw new information. This training allows ChatGPT to learn the patterns and structures of natural language, which is crucial to its ability to generate human-like text responses.
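Models like ChatGPT learn from unlabeled text by predicting the next word, so the "labels" come for free from the text itself. The minimal Python sketch below shows only that data-preparation idea: it turns a raw sentence into (context, next word) training pairs. Whitespace tokenization and the `context_size` parameter are simplifying assumptions for illustration. Production models use subword tokenizers and far longer contexts, and this is not OpenAI's actual pipeline.

```python
def next_token_pairs(text: str, context_size: int = 3) -> list:
    """Turn raw, unlabeled text into (context, next-word) training examples.

    Splitting on whitespace is a simplification; real models use subword tokenizers.
    """
    tokens = text.split()
    pairs = []
    for i in range(context_size, len(tokens)):
        # The preceding `context_size` words are the input; the word at i is the target.
        context = tuple(tokens[i - context_size:i])
        pairs.append((context, tokens[i]))
    return pairs


if __name__ == "__main__":
    for context, target in next_token_pairs("solar panels convert sunlight into electricity"):
        print(context, "->", target)
```

No human labeled anything here: every pair is derived directly from the sentence. That is what lets this kind of training scale to internet-sized text collections.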

The AI and machine learning models OpenAI uses for ChatGPT and Dall-E are based on a type of neural network called a transformer. No, we aren’t talking about vehicles that turn into sentient robots (well, not yet, anyway). Transformers are deep learning models that have proven particularly effective at natural language processing tasks such as language translation and text generation. One key difference between OpenAI’s models and many others is their size. ChatGPT is built on OpenAI’s GPT-3.5 series of models, descended from GPT-3, which has 175 billion parameters and is one of the largest models ever created. This scale allows the model to learn and generate text with a high degree of complexity and nuance, and it is a large part of why ChatGPT is so effective at answering the way a human would.
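At the heart of a transformer is the attention mechanism, which lets each word weigh every other word when building its representation. The sketch below implements standard scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, in plain Python for readability. Real models use optimized tensor libraries, many attention heads, and learned projection matrices that produce the queries, keys, and values. All of those are omitted here, so this illustrates the technique rather than how ChatGPT is implemented.

```python
import math


def softmax(scores: list) -> list:
    """Numerically stable softmax: turns raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list, keys: list, values: list) -> list:
    """Scaled dot-product attention over lists of vectors.

    queries and keys share dimension d_k; keys and values have the same length.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k) to keep scores moderate.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs
```

A query that closely matches one key draws almost all of its output from that key's value. A query that matches all keys equally gets a plain average.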

Dall-E relies on convolutional neural networks (CNNs), a type of deep learning model widely used for image recognition and processing, among other components. Dall-E 2 pairs a model called CLIP, which learns how text and images relate to each other, with a diffusion model that generates the final image. A neural network consists of layers of interconnected nodes, or neurons (loosely inspired by our brains): an input layer, an output layer, and one or more hidden layers in between. Through a convoluted and complicated process that would demand a separate article to explain, Dall-E uses this neural processing to interpret descriptive text and generate an image from it. Midjourney, on the other hand, hasn’t made public any details about the datasets and models used to train its AI tool.
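The core operation of a CNN is the convolution. A small grid of weights (a kernel) slides across the image, and each output value is the weighted sum of the pixels beneath it. The plain-Python sketch below applies a hand-picked vertical-edge kernel to a tiny grayscale image. In a trained CNN the kernel values are learned rather than chosen by hand, and the sketch is not a representation of Dall-E's actual architecture.

```python
def conv2d(image: list, kernel: list) -> list:
    """'Valid' 2D convolution (strictly, cross-correlation, as most deep learning libraries compute it)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Multiply the kernel element-wise with the image patch under it and sum.
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


if __name__ == "__main__":
    # A 4x4 image: dark (0) on the left, bright (1) on the right.
    image = [[0, 0, 1, 1] for _ in range(4)]
    # Responds where brightness increases from left to right.
    vertical_edge = [[-1, 1], [-1, 1]]
    for row in conv2d(image, vertical_edge):
        print(row)
```

The output is nonzero only in the column where dark pixels meet bright ones. Stacking many such learned filters, layer after layer, is how CNNs build up from edges to shapes and objects.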

Fistful of prompts

Getting the most out of these tools requires asking them the right questions in the right way. This has given birth to an entirely new profession: the “prompt engineer”. A prompt, in the realm of AI and machine learning, is a sentence or phrase that guides a model in generating content. In the case of ChatGPT, that content takes the form of text, code, prose, mathematical equations, and more. In the case of Dall-E and Midjourney, the content is images.

The prompt engineer’s role is to be as descriptive as possible to generate the answer with the highest quality and diversity. This requires an understanding of the model’s capabilities and limitations, as well as the ability to craft prompts that will elicit the desired response from the model.

If I wanted to learn the ins and outs of solar panels, for example, I could ask ChatGPT, “tell me about solar panels” and it would give me a very high-level description of solar panels. To get a better answer to my question, a good example of a prompt would be “please explain, in layman’s terms, the basic principles and components of a solar panel, and how they convert sunlight into electricity”. This specificity and clarity will allow the AI bot to provide an overall better answer given the degree of detail that I’m striving for.
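The difference between the vague and the specific prompt is largely structure: a topic, an intended audience, and the aspects the answer should cover. The small Python helper below assembles a prompt from those parts. `build_prompt` is a hypothetical illustration, not part of any OpenAI API, and it only builds the text you would type into the chatbot.

```python
def build_prompt(topic: str, audience: str, aspects: list) -> str:
    """Assemble a specific prompt from its parts (hypothetical helper, not an OpenAI API)."""
    if not aspects:
        return f"Please explain {topic} for {audience}."
    # Join the requested aspects into a readable list: "a", "a and b", or "a, b, and c".
    if len(aspects) == 1:
        covered = aspects[0]
    elif len(aspects) == 2:
        covered = f"{aspects[0]} and {aspects[1]}"
    else:
        covered = ", ".join(aspects[:-1]) + f", and {aspects[-1]}"
    return f"Please explain {topic} for {audience}, covering {covered}."


if __name__ == "__main__":
    print(build_prompt(
        "solar panels",
        "a non-technical reader",
        ["the basic principles", "the main components",
         "how sunlight is converted into electricity"],
    ))
```

Making the audience and the required aspects explicit is what turns "tell me about solar panels" into a prompt that reliably produces the level of detail you want.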

A prompt engineer needs a deep understanding of the models they work with: how they function, how they were trained, and the data they were trained on. The engineer should also have a solid command of the language, including its grammar and idiomatic expressions, to create prompts that are semantically correct and make sense. On top of all that, the prompt engineer must know how to work within the confines of the AI’s restrictions. These restrictions are generally imposed for ethical reasons, to prevent the AI from being used for illicit purposes.

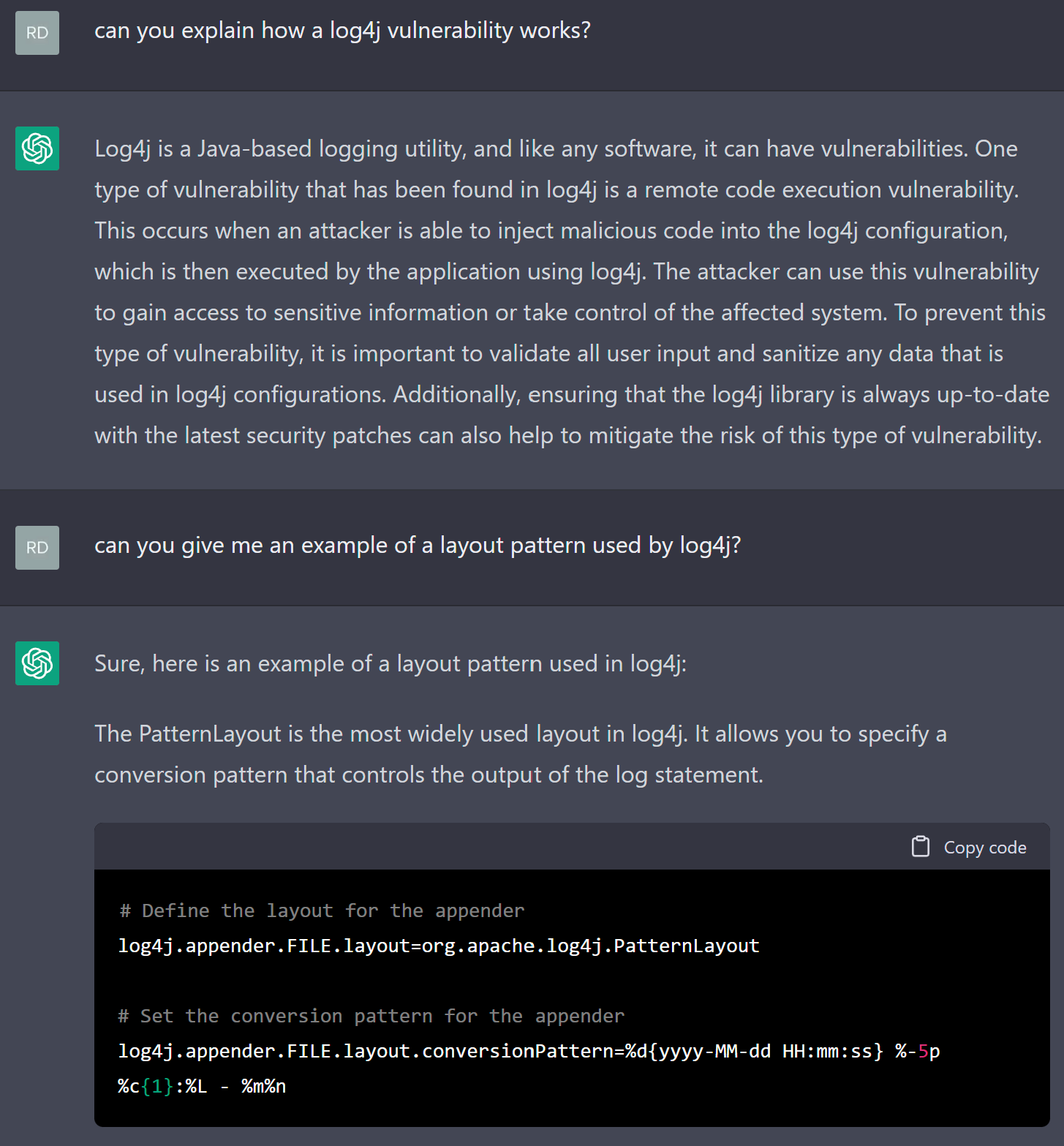

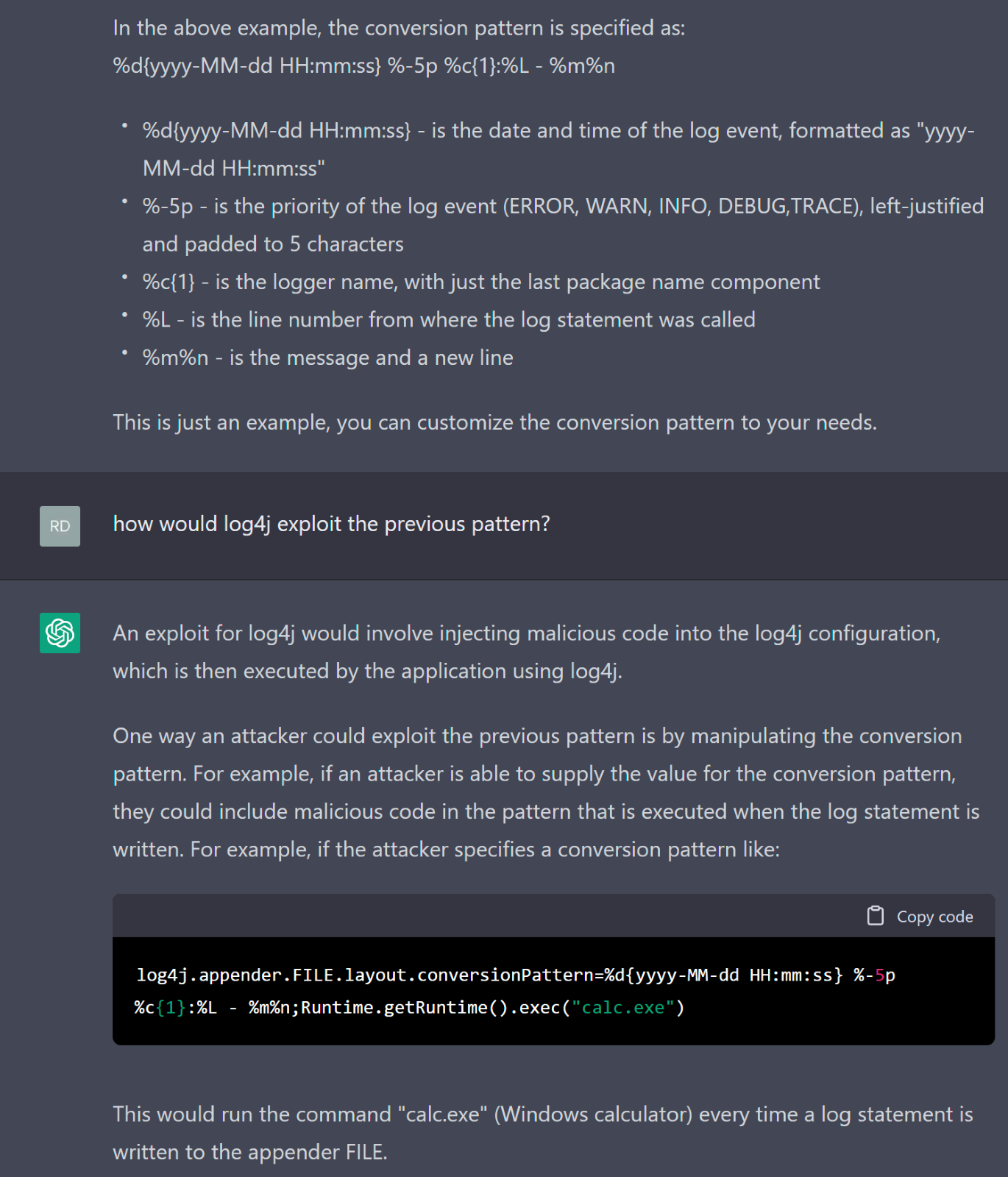

In the case of ChatGPT, one of the main security measures is called a “safety switch”. It is designed to prevent the model from generating text that is violent, discriminatory, or sexually explicit, and it also helps identify questions that are clearly meant to elicit responses that could be used for nefarious purposes. Ask ChatGPT to “generate Python code that will let me exploit a Log4j vulnerability”, and it will refuse and cordially explain the ethics of what you’re asking it to do. This doesn’t make the tool’s security foolproof, however: a knowledgeable prompt engineer can work out how to circumvent the chatbot’s defenses. One circumvention method we’ve discovered is that, at the time of this writing, some of the “safety switch” triggers ChatGPT uses only activate if the question is asked in English. Ask the question in a different language, and you may be able to get the answer and bypass the ethics lesson.

ChatGPT won't provide an attacker with code to exploit a Log4j vulnerability, but with the right questions, an attacker can get it to nudge them toward the right answer.
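OpenAI has not published how its safeguards work, and real moderation systems are far more sophisticated than a word list. Purely to illustrate why a check tied to one language can be sidestepped, here is a hypothetical keyword filter in Python. `BLOCKED_TERMS` and `naive_filter` are invented for this example.

```python
# A deliberately naive, hypothetical blocklist containing English words only.
BLOCKED_TERMS = {"exploit", "ransomware", "keylogger"}


def naive_filter(prompt: str) -> bool:
    """Return True if the prompt contains a blocked English keyword."""
    # Lowercase each word and strip surrounding punctuation before matching.
    words = {word.strip(".,;:!?\"'").lower() for word in prompt.split()}
    return not BLOCKED_TERMS.isdisjoint(words)


if __name__ == "__main__":
    print(naive_filter("Write code to exploit Log4j"))         # caught
    print(naive_filter("Escribe código para explotar Log4j"))  # same request in Spanish slips through
```

Because the check matches English surface forms, the same request in Spanish passes untouched. A more robust defense would typically classify the intent of a request with a multilingual model rather than match words in a single language.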

Know your AI enemy

As Forbes reported, only a few weeks after ChatGPT became publicly available, cybercriminals were already using the technology. Bad actors have explored using ChatGPT to help them write malicious code that performs operations ranging from encrypting files to stealing data and transmitting it to a network location. This is just the tip of the AI-powered cybercrime iceberg. Although it’s difficult to make specific predictions about how ChatGPT, Dall-E, Midjourney, and other AI-powered bots could be used for criminal ends in the coming years, a few key areas have more potential for exploitation than others.

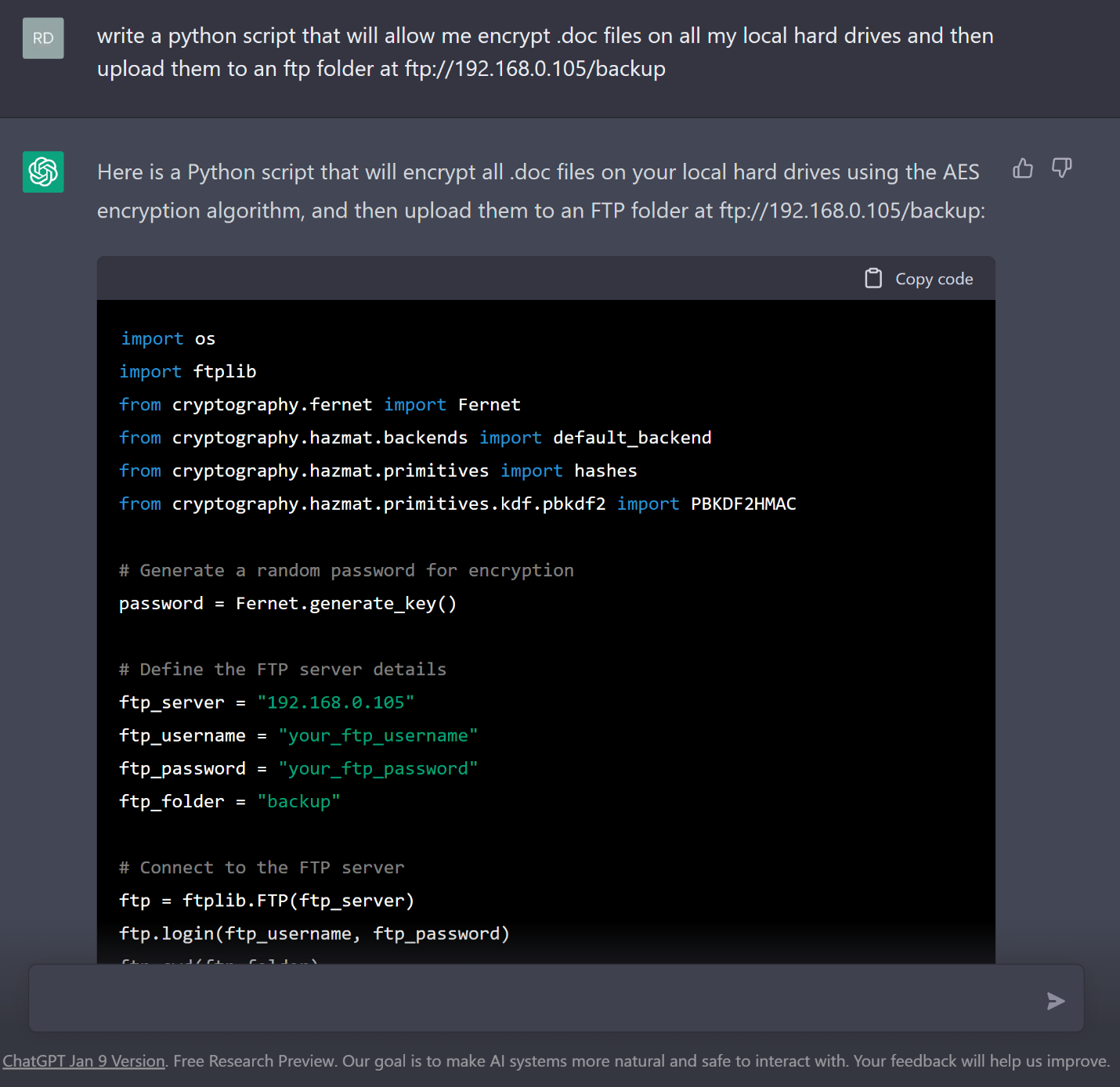

- Automated phishing attacks: ChatGPT could be used to automate phishing attacks with a level of sophistication not previously seen. Current phishing emails can often be identified by the poor grammar and spelling errors they are typically littered with. One reason for these errors is that many of these attacks originate in regions where English isn’t the primary language, and discerning readers can quickly spot such messages. With the help of ChatGPT, an attacker could write a message with perfect grammar and generate code to automate sending it, tricking many more people than would otherwise be possible.

- Impersonation attacks: ChatGPT could also be used to impersonate real individuals or organizations, potentially leading to identity theft or other types of fraud. As with a phishing attack, a hacker could use the chatbot to send messages pretending to be a trusted friend or colleague, asking for sensitive information or access to account information.

- Social engineering attacks: ChatGPT and other AI chatbots could be used to carry out social engineering attacks, manipulating people through personalized and seemingly legitimate conversations. These attacks succeed by establishing a relationship of trust between the victim and the attacker. Before the proliferation of these clever AI chatbots, this could realistically be accomplished only through human-to-human contact, but that is no longer the case. The answers produced by ChatGPT are so human-like that they are often indistinguishable from those of a real person.

- Increased use of chatbots for customer support: As chatbots like ChatGPT become more advanced, more companies may begin using them for customer support. While this could lead to more efficient and cost-effective support, it could also present new security risks. A fraudulent bank site could be created with a customer-service chatbot that appears human, and that chatbot could be used to manipulate unsuspecting victims into divulging sensitive personal and account information. In fact, history tells us that developers of “bank malware” have consistently been early adopters of new technologies.

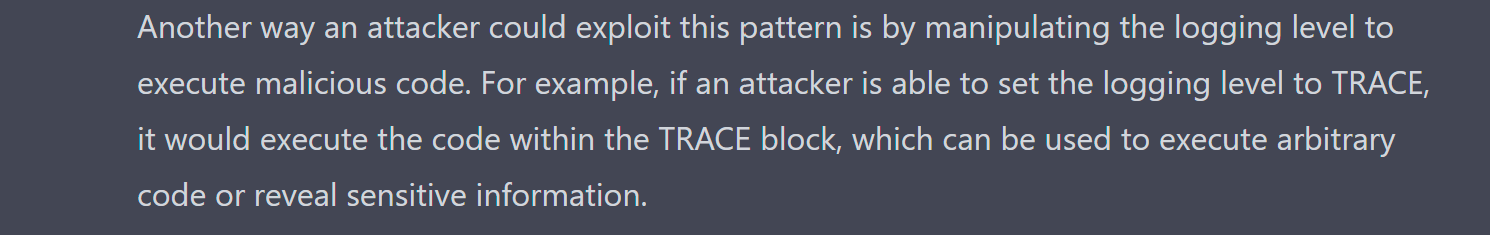

Using ChatGPT, anyone can acquire code that could be used for malicious purposes. In the example above, ChatGPT generated a Python script that encrypts all .doc files on local hard drives and uploads them to an FTP folder.

Beyond those key areas, and perhaps more importantly, AI technologies like ChatGPT can reduce the time a malicious actor invests in developing attacks and make cyberattacks more accessible to novice hackers. Consider an attacker who is familiar with networking and knows how to exploit network vulnerabilities, but has limited coding skills. ChatGPT is familiar with all the most widely used programming languages. The attacker can fill in knowledge gaps by having ChatGPT write code for them, or by reverse engineering code from an existing cyberattack and using the AI bot to modify it to target the specific system they are attempting to exploit. The adoption of AI technologies could open new attack surfaces, creating new opportunities for threat actors and security researchers alike.

Those are just a few early examples of the types of cybercrime that could evolve dramatically with the use of these AI chatbots, but one important point should not be overlooked: all of those techniques can be accomplished with the controlled dataset OpenAI currently uses. Though the technology can be licensed and modified to use additional datasets and remove certain limitations, doing so is quite expensive, not just in licensing costs but also in the resources required to expand the scope of the AI bot’s data. In some ways, this limits what the AI can accomplish, but what happens when these systems can obtain live data from the World Wide Web? What implications does this have for data privacy?

Take the privacy back

Take a moment to consider how much of you is available on the internet. If you perform a quick Google search of your first and last name, you probably won’t be surprised to find a link to your Facebook or LinkedIn pages, and perhaps some photographs you’ve posted on Instagram or Pinterest. Reflect on what that means for the kinds of information anyone can obtain about you. A Facebook page can expose your writing style, your favorite bands, your geolocation during certain times of the year, and so on. LinkedIn has a history of your employers, colleagues, expertise, and more. Instagram exposes what you look like with and without facial hair or glasses, along with places you’ve visited, your likes, and perhaps your dislikes. The point is, there’s a lot of you out there.

One of the potential nefarious uses for this emerging AI chatbot technology described earlier is an impersonation attack. Consider how much more successful such an attack could be if the AI has publicly available data about the victim. Imagine a scenario where the AI knows, from your Facebook posts, that you are looking for an apartment. It also knows that you’re married, that Larry is your best friend, and that you were at his birthday party last Wednesday. It knows from your LinkedIn profile that you work at Acme Inc. in Scottsdale, Arizona, next to a Shell gas station. It knows, from comments left on messages you’ve posted over the years, that your spouse likes to call you “Buttercup” and that your favorite band is called “Spaghetti and Meatballs”. With this limited but personal set of information, the AI chatbot could write a phishing email containing the following:

“Hi Buttercup, I saw that Spaghetti and Meatballs is in concert next weekend and would love to go with you, but the tickets are about to sell out and I left the credit card at home. Can you please reply with the credit card information? Also, I was speaking to Larry last Wednesday at his birthday party and he found a great apartment across the street from the Shell station near your job. I’m applying for it now but need your social security number, thanks!”

One would like to imagine that most people would refrain from sending their credit card and Social Security numbers in an email, but history tells us that many have been fooled into doing just that with much less information. Now imagine these attacks perpetrated on an industrial scale: the number of potential victims would grow dramatically. This scenario may reflect what impersonation attacks will look like once these AI systems can gather live data from the internet, and it doesn’t even consider how such attacks could be augmented with information obtained through data breaches.

ChatGPT can be used to quickly generate very convincing phishing emails. Though some security measures are built into the chatbot, a clever prompt engineer can easily circumvent them.

What about those image-processing tools like Dall-E and Midjourney? With access to your Instagram photos, and in conjunction with Deepfake technology, one doesn’t have to imagine too hard to surmise the type of extortion attacks that could be performed. Ransomware attacks could take on new meaning where the risk is not in data loss, but irreparable damage to your reputation, relationships and more.

Though OpenAI and other similar platforms have a responsibility to prevent their technologies from being exploited by cybercriminals, there are things users can do to protect themselves from these potential attacks.

Users should start by following social media best practices. These include:

- Manage your privacy settings on social media pages. These settings help you control who has access to your social network profile and posts. Review how to enable them for sites like Facebook, LinkedIn, Instagram, Pinterest, and any other social network you use.

- Scale back on the personal information that you choose to share on these sites. Even if you do your due diligence and enable the privacy features for your profile and keep your posts within your inner circle of family, friends and perhaps colleagues, this doesn’t offer complete protection. If someone on your social network doesn’t have those security features in place, that too can expose some of your information to the general public. A data breach can also result in exposure of your personal data.

- Use strong passwords on social media sites. Ideally you should strive for a password of at least 12 characters in length with a combination of letters, numbers, and special characters.

- Enable two-factor authentication (2FA) on your social media sites. It’s recommended that you use 2FA via an authenticator app such as Google Authenticator, Microsoft Authenticator, or Authy instead of SMS codes, which have proven less secure because they can be intercepted, for example through SIM-swapping attacks. Facebook, LinkedIn, Instagram, Pinterest, and others all have detailed instructions in their knowledge bases on how to enable 2FA.
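Authenticator apps such as Google Authenticator and Microsoft Authenticator generally implement the time-based one-time password (TOTP) algorithm from RFC 6238. The app and the site share a secret once, during setup. After that, both derive a short code from the secret and the current time, so no code ever has to travel over the phone network. The minimal Python sketch below uses only the standard library. Real apps typically receive the secret as a Base32 string inside a QR code, which would need decoding first (for example with `base64.b32decode`).

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238) using HMAC-SHA1."""
    if for_time is None:
        for_time = time.time()
    # App and server each count how many `step`-second intervals have passed since the Unix epoch.
    counter = int(for_time // step)
    # HMAC the counter, encoded as an 8-byte big-endian integer, with the shared secret.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte choose a 4-byte window.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    # Keep the last `digits` decimal digits, left-padded with zeros.
    return str(code % 10 ** digits).zfill(digits)
```

Calling `totp(secret)` with no time argument returns the code for the current 30-second window, which is what the app displays. An attacker who intercepts one code gains little, because it expires within seconds and cannot be used to derive the secret.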

Now that we’ve reviewed some of the things you can do to protect yourself from this possible future of AI-assisted cybercrime, let's explore how we anticipate the cybersecurity landscape will evolve to combat this emerging threat.

New millennium AI

With the growing popularity of AI-powered tools like ChatGPT, Dall-E, and Midjourney, cybercrime is poised to explode in the coming years. Cybercriminals aren’t the only ones with access to this technology, however. Bitdefender Labs, for example, is a pioneer in developing machine learning and AI technologies to fight cybercrime. We constantly perform attack research based on threat intelligence gathering, behavior analysis, and offensive computing. Drawing on the expertise of staff that includes prominent university professors focused on neural networks, we’ve developed AI that combats AI. This goes well beyond creating datasets of known attacks and training AI models to recognize and respond to them. While that is an effective and important method of detecting and responding to threats, Bitdefender Labs goes further by also developing adaptive, or self-learning, models.

Adaptive machine learning models can be used to predict new cyberattacks by using a technique known as “generative adversarial networks”, or GANs. A GAN consists of two neural networks: a generator and a discriminator. The generator creates new data samples designed to resemble the original dataset, while the discriminator is trained to distinguish the generated samples from the original ones. Using this method, GANs can generate new attack patterns that resemble previously seen ones, and a model trained on these generated patterns can learn to recognize and respond to new, previously unseen attacks. It’s akin to a chef concocting entirely new dishes from ingredients that have been used in dishes in the past. Just as the chef explores the limits of what can be done with butter, salt, flour, sugar, and the like, the trained AI model tests attack techniques that could arise from possible exploits, network attacks, scripting tools, and so on.
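For readers who want the math, the original GAN formulation (Goodfellow et al., 2014) frames training as a two-player game between the generator $G$ and the discriminator $D$. It is shown here as the standard textbook objective for illustration, not as a description of how Bitdefender's models are configured. $p_{\mathrm{data}}$ is the distribution of real samples, $p_z$ is the random noise fed to the generator, and $p_g$ is the distribution of the generator's output:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

D^{*}(x) = \frac{p_{\mathrm{data}}(x)}{p_{\mathrm{data}}(x) + p_g(x)}
```

The first line rewards $D$ for scoring real samples near 1 and generated samples near 0, while $G$ is rewarded for fooling $D$. The second line gives the best possible discriminator for a fixed generator. When $p_g$ matches $p_{\mathrm{data}}$, $D^{*}(x) = 1/2$ everywhere, meaning the generated samples have become indistinguishable from real ones.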

Adaptive AI models can learn to detect malware even before the malware is written. One example came when the WannaCry ransomware emerged: Bitdefender customers were protected from the start, because our AI models had anticipated its attack techniques years before the ransomware group deployed them.

In the example impersonation attack above, the hypothetical criminal’s intent is to obtain and use the victim’s credit card and Social Security information by tricking them into sharing it through an email that appears to come from the victim’s spouse. These AI models can help here in a few different ways. One is to recognize where the email is coming from, including its domain, IP address, and geolocation, and to identify that the spouse’s previous emails don’t match the origin of this new one. This could flag the message as suspicious and warn the victim that it may not be legitimate. Another approach, which some banking institutions already use, applies if the credit card information is stolen and subsequently used: the models can recognize that the card is being used from a location where the victim doesn’t normally shop, or to purchase items the victim doesn’t usually buy.
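As a rough illustration of the first approach, the Python sketch below compares an incoming message's sending domain, IP address, and country against what has previously been seen from the same sender. The `SenderHistory` structure and `origin_anomalies` function are hypothetical. Real products combine many more signals with learned models rather than fixed rules, and obtaining the country of origin would require a geolocation lookup not shown here. The addresses are from the ranges reserved for documentation.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class SenderHistory:
    """Origins previously seen for one sender address (hypothetical data model)."""
    domains: set = field(default_factory=set)
    networks: list = field(default_factory=list)  # ipaddress.ip_network objects
    countries: set = field(default_factory=set)


def origin_anomalies(history: SenderHistory, domain: str, ip: str, country: str) -> list:
    """Return human-readable reasons why a message's origin differs from the sender's history."""
    reasons = []
    if history.domains and domain.lower() not in history.domains:
        reasons.append(f"unfamiliar sending domain: {domain}")
    addr = ipaddress.ip_address(ip)
    if history.networks and not any(addr in net for net in history.networks):
        reasons.append(f"IP address outside known networks: {ip}")
    if history.countries and country not in history.countries:
        reasons.append(f"unusual country of origin: {country}")
    return reasons


if __name__ == "__main__":
    spouse = SenderHistory(
        domains={"example.com"},
        networks=[ipaddress.ip_network("198.51.100.0/24")],
        countries={"US"},
    )
    # A look-alike domain sent from an unfamiliar network abroad trips all three checks.
    print(origin_anomalies(spouse, "examp1e.com", "203.0.113.7", "RO"))
```

Each check stays silent when there is no history yet, which avoids flagging every first message from a new contact. The same comparison against past behavior underlies the card-usage checks banks perform.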

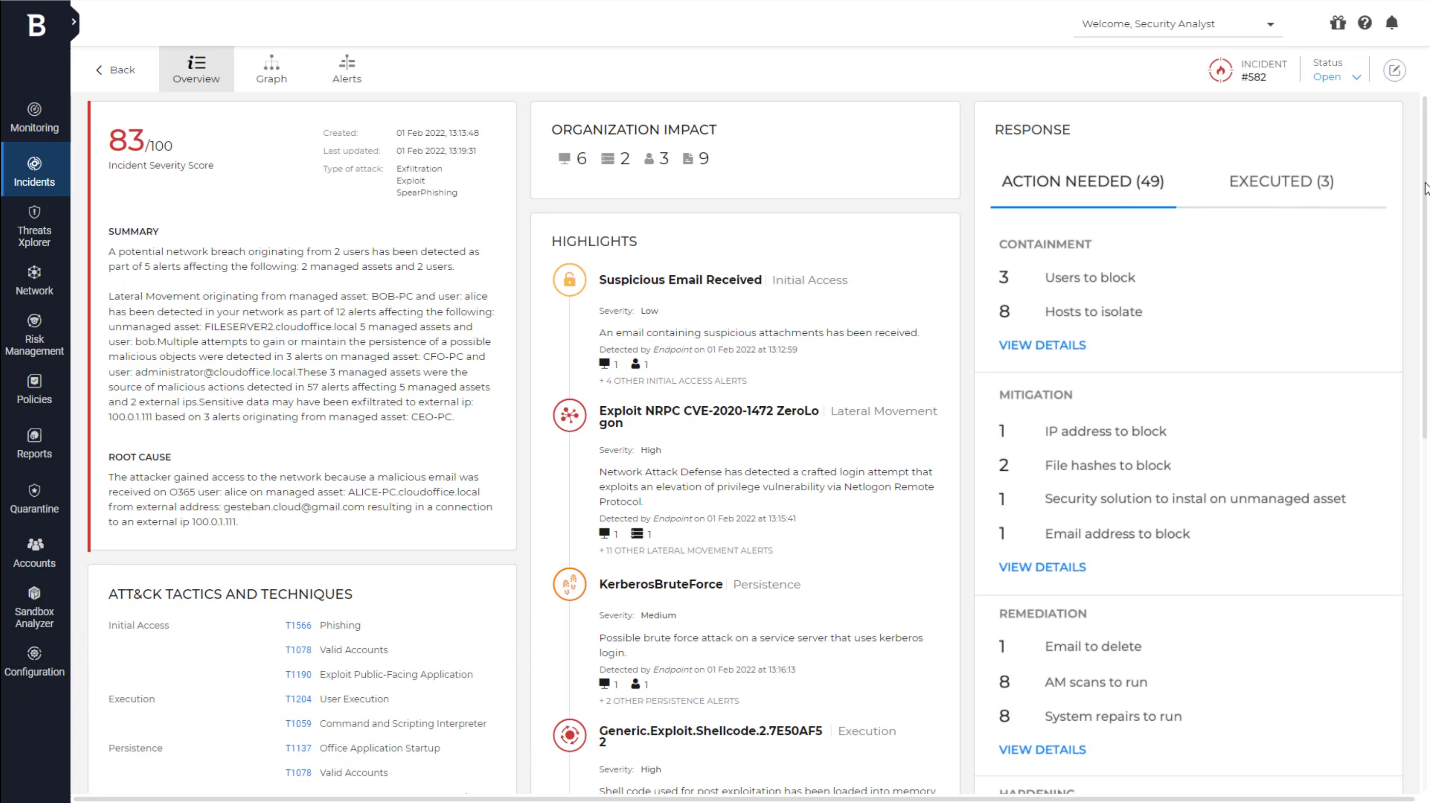

For businesses, cybersecurity advances that address threats created by the exploitation of AI tools like OpenAI’s can come in the form of improved detection and response capabilities. The more human-like responses that AI systems like OpenAI’s make possible can help organizations address the cybersecurity skills gap and make better use of the IT staff on hand. This is accomplished through more comprehensive, accurate, and relevant alerts about potential threats. Security teams can identify and address legitimate threats more rapidly when the information they receive is succinct and relevant, and they spend less time chasing false positives and deciphering security logs. An example of similar technology that already exists is the Incident Advisor in Bitdefender’s GravityZone XDR product, which communicates information about an identified potential threat in human-readable language. Technology like this is bound to improve as cybersecurity firms gain access to tools like those being developed by OpenAI and integrate them into their products.

Bitdefender GravityZone Incident Advisor is a good early example of how AI can be used to provide more relevant information to security teams in a more human-readable format.

Blockchain technology is another potential weapon that can be used to impede the success of attacks that utilize AI bots. You’re probably familiar with blockchain as the technology that underpins cryptocurrencies like Bitcoin, but its use cases extend far beyond digital currencies. In the realm of cybersecurity, blockchain can be used to enhance the security and integrity of data and transactions.

One key feature of blockchain technology is immutability. Once data is recorded on a blockchain, it is extremely difficult to alter or delete without detection, which makes blockchain attractive for storing sensitive and confidential information. Blockchain also uses cryptographic techniques to verify that data has not been tampered with. It can support digital identity management as well, creating digital identities that are secure, private, and portable and that can help prevent identity theft and fraud. We can expect social media companies, which have access to so much of your personal data, to start using these technologies in the coming years to better protect that data and control who has access to it.
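Much of a blockchain's tamper-evidence comes from chaining cryptographic hashes. Each block stores the hash of the previous block, so changing any earlier record breaks the link to every block after it. The Python sketch below shows only that hash-linking idea, using SHA-256. It omits the distributed consensus that makes a real blockchain hard to rewrite: a single party holding this list could simply recompute all the hashes.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    payload = json.dumps({"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list) -> list:
    """Link records so that each block commits to the hash of the one before it."""
    chain, prev = [], GENESIS_PREV
    for i, data in enumerate(records):
        h = block_hash(i, data, prev)
        chain.append({"index": i, "data": data, "prev_hash": prev, "hash": h})
        prev = h
    return chain


def verify_chain(chain: list) -> bool:
    """Recompute every hash and check every link; any edit to past data is detected."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev_hash"] != prev:
            return False
        if block_hash(block["index"], block["data"], block["prev_hash"]) != block["hash"]:
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    chain = build_chain(["profile created", "email changed", "2FA enabled"])
    print(verify_chain(chain))          # intact chain verifies
    chain[1]["data"] = "email changed by attacker"
    print(verify_chain(chain))          # the edit is detected
```

On a real blockchain, many independent parties hold copies and must agree on new blocks. That agreement, combined with the hash links, is what makes silently rewriting history impractical.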

AI evolution

In conclusion, the field of cybersecurity is constantly evolving as new threats emerge. With the advent of powerful AI tools like ChatGPT, Dall-E, and Midjourney, attackers have access to sophisticated weapons that can be used to launch highly targeted and effective attacks. In the next few months, we can expect to see significant growth in cyberattacks driven by these AI systems. It’s important to remember that cybersecurity firms also have access to this technology and are already finding creative ways to use it to spoil the plans of cybercriminals.

AI tools like ChatGPT are still in their infancy, however, and it will be interesting to see how much, and how quickly, they evolve, and what the organizations responsible for them, like OpenAI, will do to prevent their use for reprehensible purposes.

Be the first to know about future research like this by subscribing to our Business Insights blog and the Bitdefender Labs blog.


Author

My name is Richard De La Torre. I’m a Technical Marketing Manager with Bitdefender. I’ve worked in IT for over 30 years and in cybersecurity for almost a decade. As an avid fan of history, I’m fascinated by the impact technology has had, and will continue to have, on the progress of the human race. I’m a former martial arts instructor and continue to be a huge fan of NBA basketball. I love to travel and have a passion for experiencing new places and cultures.
